Mathematical Visualizations

This page is a work in progress.

We are building a research consortium and collaboration around these topics. Physics Foundations Society is a registered association (3066327-8) in Finland. Current research partners include a project in the philosophy of physics at the University of Helsinki and the Cosmological Section of the Czech Astronomical Society, where Suntola is a foreign member.

Interactive Mathematical Illustrations of Proposed Cosmological Timescales

Historically, the zero-energy universe was studied by Arthur Haas, Richard Tolman, Dennis Sciama, Edward Tryon, and Pascual Jordan (see Kragh 2015, p. 8, for some context and mathematical formulation), among others (perhaps Dicke and Dirac; see Kragh 2015b). The principle and its specific mathematical form were also mentioned by Richard Feynman in his Lectures on Gravitation (p. 10), which is based on notes prepared during a course on gravitational physics that he taught at Caltech during the 1962–63 academic year. He closes that section with the comment that

All of these speculations [described here] on possible connections between the size of the universe, the number of particles, and gravitation, are not original [by me] but have been made in the past by many other people. These speculators are generally of one of two types, either very serious mathematical players who construct mathematical cosmological models, or rather joking types who point out amusing numerical curiosities with a wishful hope that it might all make sense some day.

The following interactive illustrations are based on a novel interpretation of the zero-energy principle – leading naturally to bouncing cosmologies (see also 1,2,3) and to serious rethinking of the common usage of SI units, especially the second, the metre and the kilogram, along with the various derived units – and its application to physical theory development by Tuomo Suntola as documented in his work The Dynamic Universe (2018).

Assuming that the energies of matter and gravitation are in balance in a finite universe, reminiscent of an action principle and analytical mechanics,

which we refine to a differential equation by defining

the following relations hold (see 1,2,3,4,5,6,7,8).

Note that as

It is interesting that by assuming (or deriving from Maxwell's equations, as Suntola has done) that the Planck constant

one attains an exotic cosmology where the frequencies of physical oscillators, such as clocks (which also define the SI second) follow the development of the scale factor

where

So under this model, it seems that one should be quite careful in distinguishing between different timescales (see also cosmic time and age of the universe), as otherwise one may mix units in a confusing fashion. The second is involved in SI units such as joules [kg

Devising a simple geometrical explanation for the apparent uniformity of the cosmos (see, e.g., the horizon problem) leads one to consider finite, positive-curvature geometries and hyperspheres, especially the expanding 3-sphere, as the global zero-energy structure of the universe. The 3-sphere has many desirable mathematical properties: it supports exactly three linearly independent smooth nowhere-zero vector fields, it allows consistent global rotations, and it allows crosscuts along the great hypercircle arc between any two points in ordinary three-dimensional space (the volumetric surface of the 3-sphere), while still having the extra zeroth hyperdimension along the hyperradius as a degree of theoretical freedom at every point of interest. For more information about these spaces, see my presentation on Clifford algebras and other topics at the Physics & Reality 2024 conference (and 1,2,3,4). Note also that, from the viewpoint of general relativity, Sean M. Carroll mentions that in the Robertson-Walker metric on spacetime, for the closed, positive curvature case “the only possible global structure is actually the three-sphere”. Let's also appreciate that the currently observed spatial flatness (cosmological curvature parameter) is inferred under the standard cosmological model; if the model changes, the interpretation of observations regarding the shape of the universe may change as well.

Combined, these assumptions lead from motion and gravitation being in a fascinating eternal balance (Fig. 6 below) to the beautiful logarithmic spirals that the radiative tangential momenta of light may trace with us in a four-dimensional hyperspace (see Fig. 7 next). The horizon problem is thus inverted: while the observable universe can still be conceptualized around us, from a hypothetical hyperspace perspective everything (including us) is really at the “edge” of the multidimensional space, which is developing at tremendous velocity towards future possibilities, while also grounded by ever-present gravity and various consequences of past actions (but note that causality is a difficult subject; classical physics is clearly being preferred here, and contingencies are being investigated). Under this model, when we look out into distant space (tangentially), we are actually looking into the center of the hyperspace, and conceptually also past it towards the eternities.

Zooming in to the present in Fig. 7, and making a variation to the hyperradius (and thus to hypertime), could give us a glimpse how light cones and different foliations (slices or leaves) could perhaps be mapped to this presentation, provided one keeps in mind the distinction between position and momentum spaces (see also various astronomical kinematic velocities and their current estimates, where Solar velocity with respect to CMB is notable; see also comoving coordinates and local standard of rest), as in the gravity model studied here, electromagnetic radiation has momentum only in the tangential direction of the 3-sphere (that is, “ordinary three-dimensional space”) and gets a “free ride” along the expansion, having no rest mass in the vacuum. This crucial idea is studied a bit further in relation to the energy-momentum relations at the bottom of this page.

Discussions on the nature of causality could also get interesting, as the picture above naturally presents energy and information as being attainable only as conveyed by the logarithmic spirals at the speed of light (at a maximum). There are also other geometric relations present: due to the expanding spherical space, spirals have met in the past and will also meet in the very distant future, even if at present most light cones seem separate. The model also suggests that there is a theoretical possibility of some scalar potentials being instant across the universe “right now” in some sense, similarly to how in standard gravity the static field potential is “instant” (the force between inertial charges pointing towards the instantaneous location, not the retarded location, due to how the Lorentz transformations operate, also in general relativity, but see [1,2,3,4]). As a force is the gradient of energy, and changing the energy necessitates conveying (abstract) mass (under this model), which can only propagate at the speed of light, these questions about the character of physical potentials, the principle of locality, and possible “action at a distance” are complicated and under study. Already that short note about action at a distance contains suggestive ideas, such as John Wheeler and Richard Feynman “interpret Abraham–Lorentz force, the apparent force resisting electron acceleration, as a real force returning from all the other existing charges in the universe”, reminiscent of Mach's principle, but for electrodynamics. In the model under study here, it is assumed that as one cannot move any mass instantly, there is also necessarily always some slowness and inertia with regard to moving (or changing) potentials, irrespective of their range. These important but difficult structural questions are also discussed a bit more nearer to the bottom of this page.

Notice that in that same circle crosscut view of the hyperspherical space, there is the prediction for apparent brightness

the actual form of which is still contested. Note that there is the added complication of greater energetic state of the earlier universe, which affects both absolute luminance

This very constrained and almost parameterless form fits very accurately to Type Ia supernova observations (Lievonen & Suntola, in preparation, see Fig. 9a), or at least as accurately as luminosity distance in standard cosmology, but there has to be extra

The following is a research preview (Lievonen and Suntola, in preparation):

All in all, if this total geometric model turns out to be valid and useful in many contexts, it could have interesting consequences for our discussions about time, space, and motion in general. For example, the so-called cosmological time dilation, where supernova light curves are empirically observed as taking a longer duration in the past (dilated by

It seems that it is standard practice to use both the stretch factor (displayed above in Fig. 10a), and the so-called color in regression analysis when producing the distance modulus magnitude data displayed in Fig. 9a (see Scolnic et al. 2022, p. 4, from Pantheon+ papers):

Each light-curve fit determines the parameters color ($c$), stretch ($x_1$), and overall amplitude ($x_0$), with $m_B \equiv -2.5\log_{10}(x_0)$, as well as the time of peak brightness ($t_0$) in the rest-frame B-band wavelength range [emphasis added]. To convert the light-curve fit parameters into a distance modulus, we follow the modified Tripp (1998) relation as given by Brout et al. (2019a):

$$\mu = m_B + \alpha x_1 - \beta c - M - \delta_{\mathrm{bias}},$$

where $\alpha$ and $\beta$ are correlation coefficients, $M$ is the fiducial absolute magnitude of an SN Ia for our specific standardization algorithm, and $\delta_{\mathrm{bias}}$ is the bias correction derived from simulations needed to account for selection effects and other issues in distance recovery. For the nominal analysis of B22a, the canonical “mass-step correction” is included in the bias correction following Brout & Scolnic (2021) and Popovic et al. (2021). The $\alpha$ and $\beta$ used for the nominal fit are 0.148 and 3.112, respectively, and the full set of distance modulus values and uncertainties are presented by B22a.
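As a minimal sketch of the standardization step described in the quote, the following Python functions evaluate the modified Tripp relation, assuming it has the form mu = m_B + alpha·x1 − beta·c − M − delta_bias. The values of alpha and beta are the nominal ones quoted above; the fiducial absolute magnitude and the sample light-curve parameters are hypothetical placeholders chosen only for illustration, not values from the Pantheon+ release.

```python
ALPHA = 0.148        # stretch coefficient quoted for the nominal fit
BETA = 3.112         # color coefficient quoted for the nominal fit
M_FIDUCIAL = -19.25  # hypothetical fiducial absolute magnitude (assumption)


def distance_modulus(m_b, x1, c, delta_bias=0.0,
                     alpha=ALPHA, beta=BETA, abs_mag=M_FIDUCIAL):
    """Modified Tripp relation: mu = m_B + alpha*x1 - beta*c - M - delta_bias."""
    return m_b + alpha * x1 - beta * c - abs_mag - delta_bias


def luminosity_distance_mpc(mu):
    """Invert mu = 5*log10(d_L / 10 pc) and return d_L in megaparsecs."""
    return 10.0 ** (mu / 5.0 + 1.0) / 1.0e6


if __name__ == "__main__":
    # Hypothetical light-curve fit parameters, for illustration only.
    mu = distance_modulus(m_b=22.0, x1=0.5, c=-0.05)
    print(f"mu = {mu:.3f} mag, d_L = {luminosity_distance_mpc(mu):.0f} Mpc")
```

Note that stretch and color enter the distance modulus before any cosmological model is fitted, which is the point raised in the discussion of this quote.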

It could prove problematic that distance and redshift are used implicitly (via stretch and color) already in the data releases to which the cosmological models are then fitted, instead of the observed spectral energy flux densities being predicted directly (as would be preferable in physics). It seems that when different cosmological models are compared (using predicted brightnesses and their inferred distances) against SNIa data, some theoretical assumptions may already have been baked in, as the language mentioning rest-frames in the above quote also suggests. Observe also how Anderson (2022, p. 2) motivates K-correction:

The K−correction accounts for the difference between the (observed) apparent magnitude of a source and the apparent magnitude of the same source in its comoving (emitter) inertial frame. [emphasis added] The difference is caused by two effects: a systematic shift of the spectral energy distribution (SED) incident on the photometric filter (bluer parts of the emitted SED pass through the photometric filter), and a dimming effect due to the fixed-width filter appearing compressed when viewed from the source. [emphasis added]

The luminosity distance itself should take care of all the physical attenuation factors during the expansion, so it would predict the observations. Now it almost seems as if using the co-moving distance in the luminosity distance causes extra

It would be illuminating if the

Contrary to standard cosmology, employing these novel brightness-redshift-scale-age relations under study on this page here, many observations of the James Webb Space Telescope might make more sense under this model: instead of “too early” galaxies at redshift

Various distance measures utilized in reasoning about the dimensions of the observable universe would get updated; the comoving distance would be related to the length of the hyperradius-normalized circular arc in the logarithmic spiral plots above (Fig. 7), and the so-called proper distances would be then related to the non-normalized, expanding arcs between the points of interest.

Instead of integrating out the light-travel distance, the optical distance

Furthermore, a more precise cosmological angular measure

where

What is highly intriguing (and needs a thorough investigation) is that when interpreting observational data using these geometric angular measures, it seems that many astronomically interesting objects, instead, could turn out to be expanding with the space, as the apparent angular size is then

due to the (hypothetical) current diameter

The following figure displays work-in-progress in interpreting these angular measures.

- Blue curves predict observed angular sizes of objects that expand with the space. So under this model, it seems as if galaxies expand with the space, which would be contrary to what is the long-time consensus in astrophysics.

- Red curves predict the angular sizes of constant objects. There are various alternative versions for research purposes. The one with a turnover point is the angular diameter distance in standard cosmology (ΛCDM), which does not seem to be a good predictor for this particular data.

- The possible 3-sphere lensing effect M = ln(1 + z) / sin(ln(1 + z)), predicting peaking at antipodal points, is under study. Note also that such magnification could affect the inferred velocities of distant phenomena.

- The data (open circles and black dots) is from Nilsson, Valtonen, Kotilainen, and Jaakkola (1993, p. 469, Fig. 5). (See also FINCA)

- The interpretation of angular sizes (observed sizes in calibrated pixels) from JWST is under study (the dim ellipsoid at the bottom). There are various factors which can affect the interpretations. There is material, for example, in [1] and [2].
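The possible 3-sphere lensing factor listed above can be evaluated directly. The following is a minimal sketch, assuming that ln(1 + z) is read as an angle in radians, so that the factor diverges when that angle reaches π (the antipodal point). That reading is an interpretive assumption made here for illustration.

```python
import math


def lensing_magnification(z):
    """M = ln(1 + z) / sin(ln(1 + z)), with ln(1 + z) read as an angle in radians."""
    theta = math.log1p(z)
    if theta == 0.0:
        return 1.0  # limit of theta / sin(theta) as theta -> 0
    return theta / math.sin(theta)


# Redshift at which the angle reaches pi (the antipodal point), where M diverges.
Z_ANTIPODE = math.expm1(math.pi)

if __name__ == "__main__":
    for z in (0.1, 1.0, 5.0, 10.0, 20.0):
        print(f"z = {z:5.1f}  M = {lensing_magnification(z):8.3f}")
    print(f"antipodal redshift: z = {Z_ANTIPODE:.2f}")
```

Under this reading the magnification stays close to unity at low redshift and grows rapidly only as the redshift approaches roughly 22.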

So in this model, galaxies and planetary systems could be expanding after all (along with the rest of space, as the so-called kinematic and gravitational factors are conserved in this gravitational model), and so would stars and planets, as gravitationally bound objects, to some extent. “Electromagnetically bound” systems (Suntola's articulation), such as atoms, would not expand along with space, but “unstructured matter”, which light also represents due to the wavelength equivalence of the energy of radiation in this framework, would expand and dilute with space, as dictated by the presented zero-energy principle in the evolving universe. See also the works by Heikki Sipilä, such as Cosmological expansion in the Solar System. Working out the consequences and implications within Suntola's framework is a difficult task, involving thermodynamics and contemplations about elastic SI units, among other interesting questions.

Interactive Mathematical Illustrations of Proposed Local Timescales and Gravitational Effects

The following displays various important proposals contained in Suntola's work. Visualizations are under development.

Local gravitation, in an idealized setting where there is a mass

So the binding (decreasing) effect of local gravitational energy

The tangent of the hypersurface crosscut is then

which can be integrated to arrive at the hypersurface crosscut shape of

or integrating from a reference distance

Let's also note that in general relativity, the Schwarzschild solution differs from the above by a square root and a factor of two in the critical radius, so integrating the GR solution to model the “bending of spacetime in extra hyperdimension” so that the local projection

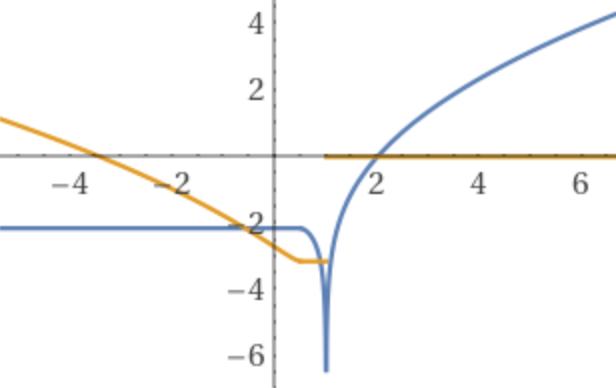

The following illustrates the resulting hypothesized “dent”

The plots below display various velocities related to this gravity model. They are ordered from more global timescale to more local. Specifically, the uppermost plot (Fig. 12a) displays velocities with respect to the flat space (static observer at rest far from the critical radius, but ignoring light propagation delays). From that perspective, which is actually the most common one when we are almost always modeling physical phenomena from far away, the speed of light (middle yellow line) slowing down near mass centers is not too exotic, as that is also the prediction in general relativity. Note, however, that various forms of the equivalence principle are not taken as axioms here, and will most probably not hold in these strong gravitational fields in this gravitational model. The middle diagram (Fig. 12b) relates then the velocities to that local time standard (proper speed of light, that middle yellow line), which changes with gravitational state (distance

In blue, there is the velocity of free fall (equivalent to escape velocity), which is related to the sine of the angle of rotation of the hypersurface volume. The velocity of free fall saturates at the speed of light at the critical radius, which seems very nice and regular. However, do note how the “4D-well” (black line in the earlier Fig. 11) extends arbitrarily far in the direction of the hyperradius, possibly even to the origin of the hyperspace where different black holes could be connected (at least in the early history of the cosmos). But as the hyperspherical space is expanding and the hypersurface volume is thus developing vertically in the crosscut picture at the speed of light (by definition of the hypervelocity), it seems likely that a falling object can at most stay at the same absolute hyperradius distance, not travel “backwards in time” to the origin. The horizontal and vertical components (gray lines) of the escape velocity have also been plotted for convenience. The interpretations of the Schwarzschild coordinates (dashed lines, and accompanying velocities) should be treated with caution here, as only some of them are exactly known from GR studies, and in different coordinates (such as using null geodesics), the meaning of the critical radius would change considerably. Some Schwarzschild velocities, such as the free-fall coordinate velocity (dr/dt) and orbital velocities (red dashed lines, in their “distant observer” coordinate form, “hovering” observer proper form, and an orbiting observer proper form), should be exact according to our knowledge, but even they may contain errors, because we have conflicting information from textbooks (see also 1,2,3,4).
It is of interest that in Schwarzschild coordinates, the Newtonian orbital velocity depicts also the orbital velocity in coordinate time (for a far-away observer), but cannot support stable circular orbits nearer the critical radius (as depicted by the abrupt ending of red dashed line at the photon sphere).
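The Schwarzschild statements above can be checked with a short sketch. It uses the commonly stated textbook expressions for circular geodesic orbits, with radius expressed as x = r / r_s: the coordinate-time orbital speed keeps the Newtonian form sqrt(GM / r), while the speed measured by a static (“hovering”) observer at the same radius is sqrt(GM / (r − r_s)). These expressions are not taken from this page and are worth verifying against a GR reference. The function names are illustrative.

```python
import math


def coordinate_orbital_beta(x):
    """Orbital speed r*dphi/dt over c in coordinate time; x = r / r_s.

    For circular geodesics (dphi/dt)^2 = GM / r^3, the Newtonian (Kepler) form,
    so r*dphi/dt = sqrt(GM / r) = c * sqrt(1 / (2 x)).
    """
    return math.sqrt(1.0 / (2.0 * x))


def hovering_observer_beta(x):
    """Orbital speed over c as measured by a static observer at the same radius."""
    return math.sqrt(1.0 / (2.0 * (x - 1.0)))


if __name__ == "__main__":
    for x in (1.5, 3.0, 10.0, 100.0):
        print(f"r = {x:6.1f} r_s  coordinate: {coordinate_orbital_beta(x):.4f} c"
              f"  hovering: {hovering_observer_beta(x):.4f} c")
```

At x = 1.5 the locally measured orbital speed reaches the speed of light, which is why circular orbits end abruptly at the photon sphere.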

Some special points of Schwarzschild geodesics have been highlighted in Fig. 11, such as between 1.5 (so-called photon sphere) and 3 times the Schwarzschild radius

In the gravity model studied here, orbital velocities (red line, in circular orbits) stay nice and regular down to the critical radius (Suntola 2018, pp. 142–163). Maximum orbital velocity is attained at four times the critical radius (Fig. 12a). Relative to gravitational state at a distance

which can be compared to Kepler's third law of planetary motion. In the limit, as

For a local free-falling (non-inertial) observer, the situation is complicated, as the velocities seem to increase without limit (as reference frequencies decrease towards zero, affecting the SI second), but at the same time the SI meter expands (both definitionally and physically, in this model). The observational velocities are thus inferred as constant, and farther distances are measured as shrunk, as the light travels a longer distance in the observationally same time frame. For an observer in a circular orbit (red line in the above figures), the situation is also quite remarkable in lower orbits, as the reference frequencies and oscillators come to a standstill the closer one orbits the critical radius, so distances are inferred as warped and shrunk in a complicated but perhaps manageable way. Note, however, that the actual orbital velocities seem to come to a standstill, so the kinematic term approaches unity (no kinematic time dilation). Matter seems to dissolve into some exotic form of mass-energy. Suntola claims that these kinds of slow orbits (see the same red line from a point of view in the distant flat space in Fig. 12a, and compare to the spatial picture in Fig. 11) maintain the mass of the black hole. It is quite a different picture than a pointlike singularity, as here photons can also climb very slowly up from the critical radius (which is half of that of the Schwarzschild event horizon).
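The two radii mentioned above, and the earlier statement that the maximum orbital velocity is attained at four times the critical radius, can be illustrated with a short sketch. The critical radius is taken as GM/c², half of the Schwarzschild radius, as stated above. The orbital velocity formula is an assumption: it is not reproduced on this page, and the form sqrt(δ(1 − δ)³) with δ = r_c / r is used here only because it is consistent with a maximum at four times the critical radius. It should be checked against Suntola (2018, pp. 142–163).

```python
import math

G = 6.67430e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light, m/s
M_SUN = 1.98847e30     # solar mass, kg


def schwarzschild_radius(mass):
    """Schwarzschild radius 2GM/c^2 in metres."""
    return 2.0 * G * mass / C**2


def du_critical_radius(mass):
    """Critical radius of the model studied here: half the Schwarzschild radius."""
    return G * mass / C**2


def du_orbital_beta(delta):
    """ASSUMED form of the circular orbital velocity over c, delta = r_c / r."""
    return math.sqrt(delta * (1.0 - delta) ** 3)


if __name__ == "__main__":
    # Coarse scan over delta in (0, 1) to locate the maximum numerically.
    deltas = [i / 10000.0 for i in range(1, 10000)]
    best = max(deltas, key=du_orbital_beta)
    print(f"r_s(Sun) = {schwarzschild_radius(M_SUN):.1f} m, "
          f"r_c(Sun) = {du_critical_radius(M_SUN):.1f} m")
    print(f"maximum at r = {1.0 / best:.2f} r_c, beta = {du_orbital_beta(best):.4f}")
```

With this assumed form, the orbital velocity falls to zero at the critical radius itself, in line with the slow orbits described above.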

Some claims, such as the escape velocity having a well-behaved form down to the critical radius, could simplify the common physical picture in the long term. However, the velocities plotted to local time standards imply some rather exotic physics, where the velocity of free fall can meet and exceed the local proper velocity of light (which is decreasing near mass centers in this gravitational model), as Suntola claims that in free fall, the relativistic mass increase is not necessary, because the energy is taken from the hyperrotation of the space itself (maintaining the zero-energy principle). It could mean that on the atomic level, new kinds of mass-energy conversions could be possible around black holes near the critical radius, which are perhaps hitherto undertheorized. Distance

It is also interesting to plot the surface integral outside of its domain of applicability using complex values, where mathematically the surface seems to have imaginary values (orange line) starting from

As a mathematical curiosity, also the period formula shown previously can be plotted in complex domain, where the period is pure imaginary inside the critical radius.

When operating far from the critical radius (

which can be used to approximate the volumetric surface shape (the hyperradial dimension) in many calculations. For example, Suntola utilizes semi-latus rectum ℓ as a reference distance

during an (approximative) Keplerian orbit.

It is interesting how the resulting prediction for the rate of period decrease of two bodies orbiting one another (thus emitting gravitational radiation) seems more compact in this gravity model than in general relativity, even though they are both employing approximations. The DU solution is (Suntola 2018, pp. 162–163)

whereas GR presents

which are perhaps surprisingly similar (which is reassuring, as they are derived using quite different means). However, they have very different predictions when orbital eccentricity

I recommend taking a moment here contemplating the gravity of the above statements.
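For reference, the GR side of the comparison above can be sketched numerically. The function below implements the standard orbit-averaged quadrupole-radiation expression (the Peters and Mathews form) as it is commonly written. The DU expression is not reproduced here, since its exact form should be taken from Suntola (2018, pp. 162–163). The input values in the example are rounded, illustrative parameters of a Hulse–Taylor-like binary pulsar, not a data release.

```python
import math

T_SUN = 4.925490947e-6  # G * M_sun / c^3 in seconds


def gr_period_derivative(period_s, ecc, m1_sun, m2_sun):
    """Orbit-averaged dP/dt from quadrupole radiation (Peters & Mathews form).

    period_s is the orbital period in seconds, ecc the eccentricity, and the
    masses are given in solar masses. The result is dimensionless (s/s).
    """
    ecc_factor = ((1.0 + (73.0 / 24.0) * ecc**2 + (37.0 / 96.0) * ecc**4)
                  / (1.0 - ecc**2) ** 3.5)
    mass_factor = m1_sun * m2_sun / (m1_sun + m2_sun) ** (1.0 / 3.0)
    return (-192.0 * math.pi / 5.0
            * (2.0 * math.pi * T_SUN / period_s) ** (5.0 / 3.0)
            * ecc_factor * mass_factor)


if __name__ == "__main__":
    # Rounded, illustrative inputs resembling the Hulse-Taylor binary pulsar.
    pdot = gr_period_derivative(period_s=27906.98, ecc=0.6171,
                                m1_sun=1.44, m2_sun=1.39)
    print(f"dP/dt = {pdot:.3e} s/s")
```

The eccentricity factor alone raises the decay rate by about an order of magnitude at e ≈ 0.62 compared to a circular orbit, which is why the eccentricity dependence is a sensitive point when comparing models.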

With this construction, the “rest momentum”

Solving the above for

When taking the gradient of the scalar potential – again in an idealized setting around a single large mass, as is usual when analysing orbital dynamics (sums of these potentials have not been analyzed apart from discretizing the space to nested energy frames) – it seems that local gravitational force gets augmented with a cosine factor due to distance

In addition to distance

Here, instead, the form of signal propagation duration between radial coordinates can be calculated using (see also)

which gives very similar predictions when far from critical radius (and can be truncated to simpler algebraic forms).

It is also quite straightforward to derive predictions for orbital periods using this kind of geometric reasoning, as Suntola has done, and that was also briefly displayed above.

We are also studying the implications of the above to relativistic mechanics, where it is well-known that Newton's second law is modified to

whereas in the model studied here, it seems that it is further modified with the effect of local gravity (

which could have implications for the equivalence principle, but needs further study to disambiguate the scalar, 1D, 2D, 3D, and 4D components, and to analyze the possibly varying measurement units involved.

These discussions on the hypothetical reduced rest momentum and local timescales (variable proper speed of light, as observed from a distance) lead us to kinematic and gravitational time dilation, which are both routinely taken into account in satellite operations. To see the components, study, for example, these two images (from popular sources [1,2], with texts and markings kept verbatim, and the model studied here plotted on the image):

Note that the experimentally confirmed slight gravity speedup (gravitational time dilation) of clocks seems to imply that the speed of light is necessarily also slightly higher up there, as the “speed of light in a locale is always equal to

The above pictures are present even in the Lunar distance calculations. Often data analysis summaries mention exotic considerations such as Lorentz contraction of the Earth and the Moon, but fail to emphasize that Shapiro time delay is routinely added to computations (see Battat et al. 2009, p. 34), which enables calculating with a hypothetical constant speed of light:

The range model used to analyze the data presented here [for millimeter-precision measurements of the Earth-Moon range] was built upon the publicly available JPL DE421 Solar System ephemerides, from which the positions and velocities of the centers of mass of the Earth and Moon (among other Solar System bodies) can be interpolated. In addition, the range model estimates the position of the ranging station with respect to the center of mass of the Earth and the retro-reflector array position with respect to the center of mass of the Moon. The relativistic Shapiro time delay of light is included [emphasis added], as is the refractive delay through the Earth’s atmosphere, as prescribed by Marini & Murray (1973), though annual averages of the meteorological data, rather than their instantaneous values, are used. The range model, however, is incomplete and can produce drifts in the O−C residuals [...]

So it seems that, in actuality, many pictures where light is depicted as propagating with a constant velocity in space (see, for example, 1, 2) misrepresent reality: what is actually observed, and how it is also theorized in general relativity, implies that the speed of light slows down slightly near large masses and that radial distances are stretched due to the curvature of space. Unfortunately, the Shapiro time delay and its meaning for speed of light measurements are not discussed at all in the most definitive SI standards documentation, such as the Mise en Pratique for the definition of the metre in the SI (search for “lunar” and “Moon”), prepared by the Consultative Committee for Length (CCL) of the International Committee for Weights and Measures (CIPM). It is as if the incorrectness of calculating with a constant speed of light is not realized even by most experts. Our current diagnosis is that the necessary procedures routinely applied in scientific instrumentation and measurement technologies have been abstracted with terms such as "Shapiro time delay" or "relativistic corrections", which obscures their important physical implications for the phenomena under study. The effects of gravitation are small, but they then seem to match exactly the observed gravitational redshift, also near the Earth's surface (though there are dual accounts in terms of either a changing propagation velocity or a changing frequency, depending on the perspective used).
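To give a sense of scale for the Shapiro time delay discussed above, the following sketch estimates the extra coordinate time for a radial light path in the Earth's field alone, using the standard first-order logarithmic term 2GM/c³ · ln(r_outer / r_inner). This is a simplified illustration: it ignores the fields of the Sun and the Moon, non-radial geometry, and the atmosphere, and it is not the range model of Battat et al. (2009). The mean radius and mean lunar distance are rounded textbook values.

```python
import math

GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3 s^-2
C = 299_792_458.0           # speed of light, m/s


def radial_shapiro_delay(r_inner, r_outer, gm=GM_EARTH):
    """Extra coordinate time (s) for a radial light path, first order in GM/(r c^2)."""
    return 2.0 * gm / C**3 * math.log(r_outer / r_inner)


if __name__ == "__main__":
    r_surface = 6.371e6   # mean Earth radius, m
    r_moon = 3.844e8      # mean Earth-Moon distance, m
    one_way = radial_shapiro_delay(r_surface, r_moon)
    print(f"one-way delay: {one_way * 1e12:.1f} ps "
          f"(equivalent to {one_way * C * 100:.2f} cm of path)")
```

A delay of this size corresponds to centimetres of path, which is why it cannot be neglected in millimeter-precision lunar ranging.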

Note also that the orbital speed slowdown (kinematic time dilation in Figs. 14a,b) is not calculated with respect to each observer, but with respect to the Earth-centered inertial (ECI) coordinate frame (see, for example, geocentric celestial reference system (GCRS) and Geocentric Coordinate Time TCG). All the system components are eventually referred to a common coordinate time scale. So judging from this picture, it seems quite evident that the reciprocity of time dilation does not hold in most physical settings (outside of thought experiments in special relativity, or experiments where the temporal and spatial scales are so small that these effects can be ignored in some otherwise symmetric setting, as if the space were empty). According to these pictures, if a clock is taken to a higher satellite orbit where it has less orbital velocity, its hyperfrequency will be sped up (interpreting the kinematic factor), and if a clock is lowered to a lower satellite orbit where it needs more orbital velocity to stay in orbit, its hyperfrequency will be slowed down (again interpreting just the kinematic factor). This happens from the point of view of this common frame irrespective of any observers, and there cannot be reciprocity of kinematic time dilation here: as observed from these satellites (or from anywhere else, for that matter), the situation cannot somehow reverse without major paradoxes appearing down the line. With a slowed-down clock, one necessarily measures the other velocities as higher, not the other way around as would be needed for reciprocity. We are happy to discuss any empirical evidence proving otherwise, but for now, we do not have reasons to believe that warping or contracting the space, rotating coordinate systems, or some other hypothetical effect would somehow salvage the reciprocity here. So for now, these are treated as common confusions due to apparently mixing event-centric kinematic and properly system-centric dynamic (i.e. energy conserving) descriptions, and will be studied later on this page.

When a signal is transmitted to or received from space, the Sagnac effect is also important. When discussing GPS, McCarthy and Seidelmann (2018, p. 273) state simply that

The third effect [in addition to the gravitational and kinematic effects] is the Sagnac delay, which is caused by the motion of the receiver on the surface of the Earth due to the Earth’s rotation during the time when the signal is on its way from the satellite. This delay is computed in the GPS receiver [...] [and] can be as large as 133 ns.

There are many ways the treatment of Sagnac delay is motivated in the literature, but Suntola has calculated that treating it simply as the lengthening of the propagation path during the transmission of the signal due to the receiver moving along with the rotating Earth, results in the most straightforward mathematics and its physical interpretation.
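The path-lengthening reading of the Sagnac delay can be sketched in a few lines. The example assumes a receiver on the equator, a satellite in the equatorial plane on the western horizon (so that the receiver moves directly away from the incoming signal), and a circular orbit of approximate GPS radius. These geometric choices and the function name are illustrative assumptions, chosen to reproduce a worst case, and the calculation is to first order in the receiver speed over c.

```python
import math

C = 299_792_458.0            # speed of light, m/s
OMEGA_EARTH = 7.2921159e-5   # Earth rotation rate, rad/s
R_EARTH = 6.378137e6         # equatorial radius, m
R_GPS_ORBIT = 2.656e7        # approximate GPS orbital radius, m


def sagnac_delay(range_m, receiver_speed, cos_angle):
    """Delay from the receiver moving along the line of sight during signal flight.

    The signal needs range_m / c to arrive; meanwhile the receiver moves
    receiver_speed * range_m / c, of which the fraction cos_angle lies along
    the propagation path. First order in receiver_speed / c.
    """
    flight_time = range_m / C
    path_lengthening = receiver_speed * flight_time * cos_angle
    return path_lengthening / C


if __name__ == "__main__":
    v_equator = OMEGA_EARTH * R_EARTH
    # Satellite on the western horizon in the equatorial plane: the line of
    # sight is tangent to the Earth and parallel to the receiver's motion.
    horizon_range = math.sqrt(R_GPS_ORBIT**2 - R_EARTH**2)
    delay = sagnac_delay(horizon_range, v_equator, 1.0)
    print(f"worst-case Sagnac delay: {delay * 1e9:.1f} ns")
```

Under these assumptions the result lands close to the 133 ns maximum quoted above, which supports the simple path-lengthening interpretation at this level of approximation.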

So it is quite evident that Figs. 14a and 14b warrant study and discussion. What is the role of the approximations and structural explanations here (Lievonen & Suntola B, also in preparation)? The images are in weak gravity (where the gravitational factor is near unity), and also still quite far from relativistic velocities (thus the kinematic factors are also near unity), but the components of time dilation are already empirically visible. In mathematical terms, it is then interesting that calculating the total time dilation (in blue) using a sum of time dilations (the conventional way),

and using a factorized structure instead (the model studied here),

really result in practically equivalent predictions, but very different interpretations of experiments, different theory structures, and different extrapolations to strong gravitational fields and associated high kinetic energies.
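How close the two structures are in weak fields can be checked numerically. The sketch below assumes that the additive form is 1 − δ − β²/2 and that the factorized form is (1 − δ)·sqrt(1 − β²), with δ = GM/(rc²) and β = v/c. Both are assumptions made for illustration, as the exact expressions are not reproduced here. The clock rate is taken relative to a distant coordinate clock, and an approximate GPS orbital radius is used. Because the deviations from unity are of order 10⁻¹⁰ and their difference is far below double-precision resolution near 1, the code works with deviations from unity directly.

```python
import math

GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3 s^-2
C = 299_792_458.0           # speed of light, m/s


def sum_form_deviation(delta, beta):
    """Clock-rate deviation from unity, additive form: -(delta + beta^2 / 2)."""
    return -(delta + 0.5 * beta**2)


def product_form_deviation(delta, beta):
    """Deviation from unity of (1 - delta) * sqrt(1 - beta^2).

    Evaluated through log1p/expm1 so that differences far below the
    double-precision resolution of numbers near 1 are not lost.
    """
    return math.expm1(math.log1p(-delta) + 0.5 * math.log1p(-beta**2))


if __name__ == "__main__":
    r_orbit = 2.656e7                          # approximate GPS orbital radius, m
    delta = GM_EARTH / (r_orbit * C**2)        # gravitational factor GM / (r c^2)
    beta = math.sqrt(GM_EARTH / r_orbit) / C   # circular orbital speed over c
    s = sum_form_deviation(delta, beta)
    p = product_form_deviation(delta, beta)
    print(f"sum form:     {s:.6e}")
    print(f"product form: {p:.6e}")
    print(f"difference:   {p - s:.3e}")
```

At satellite altitudes the two forms differ only at second order in the small quantities, far below current clock comparison accuracy, so the choice between them has to be argued on structural grounds or tested in strong fields.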

As a brief preview of things to come in terms of the hypothetical gravitational model studied here, the hyperfrequencies of clocks and oscillators are predicted to follow the reduction in local “rest momentum”, due to the effects of both motion and gravitation in the local “energy frame” (see again Fig. 14a), which is regarded as an objective and totality-oriented concept, as opposed to an observer-oriented one.

The reduction is modeled as two factors (kinematic and gravitational), formally affecting the “rest mass” and “speed of light” separately, but actually modulating the “rest momentum” as a total:

Note the striking similarity (when multiplied by

It is related to kinetic energy, potential energy, and total energy, and it makes the relativistic action functional proportional to the proper time of the path in spacetime.

Note that the critical radius

whereas in DU space, the above could be interpreted as

which has a nice quadratically symmetric aesthetic to it. It could be related to the so-called harmonic and isotropic coordinates that Steven Weinberg utilized in his impressive work on Gravitation and Cosmology (1972), but this connection has not been studied properly yet. In the above comparison, the direction of the approximation (which one is more accurate on theoretical and empirical grounds) is contested (see Suntola 2018, p. 158). In DU, the

The following diagram illustrates how close the approximations are to each other, especially far from the critical radius.
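The closeness of the two expressions can also be tabulated. This sketch assumes the comparison is between the Schwarzschild factor 1 - 2GM/(rc²) and the squared form (1 - GM/(rc²))², with the distance expressed as x = r/(GM/c²). Both the forms and the function names are illustrative assumptions rather than a transcription of the formulas on this page.

```python
def schwarzschild_factor(x):
    """Assumed Schwarzschild form, with x = r / (GM/c^2)."""
    return 1.0 - 2.0 / x

def squared_factor(x):
    """Assumed 'quadratically symmetric' form, with x = r / (GM/c^2)."""
    return (1.0 - 1.0 / x) ** 2

def gap(x):
    """Difference between the two forms; algebraically this equals 1/x^2."""
    return squared_factor(x) - schwarzschild_factor(x)

for x in (2.5, 5.0, 10.0, 100.0, 1.0e4):
    print(f"x = {x:>8g}  schwarzschild = {schwarzschild_factor(x):.8f}  "
          f"squared = {squared_factor(x):.8f}  gap = {gap(x):.3e}")
```

Because the difference falls off as 1/x², the two forms become indistinguishable far from the critical radius and differ appreciably only within a few critical radii.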

In the Schwarzschild metric, it is well-known that one can study the time and space coordinates separately in idealized situations. For example, by setting spatial differentials to zero (

which in DU proposition is

It depicts the gravitational time dilation at a distance

where

Also for the spatial coordinates the same treatment (setting now

which in DU proposition is

Here we could have divided the metric with local

showing how the radial distances stretch along the hypothetical hypersurface, when the model is embedded in the extra spatial hyperdimension.

Both of these effects – gravitational time dilation, and radial stretching of space – were depicted before in Figs. 11 and 12. Let's acknowledge that this is a very unorthodox treatment of the metric, and as the actual tensors have not been decomposed here with matrix square roots, it is also very non-rigorous. Also, treating spacetime intervals as compared to the coordinate time at a distance (as opposed to between different relative observers) may not bring out all the difficulties here, but also in standard treatments the

Also usually the radial light propagation is calculated by using null geodesics (setting proper time

which results in (see also)

but it is then the same as simply projecting the light propagating along the hypersurface at velocity

Thus this decomposition seems to result in an analysis comparable to that of relativistic effects, as the light geodesics seem to follow the hypersurface with empirically correct time delays, for example. We therefore feel that, in spite of the quite evident incompleteness compared to the body of knowledge accumulated over decades of research on general relativity (such as differential geometry and spacetime basis transforms), there may be something really relevant about physical relativity here, as scalar projections are also often indicative of the systems under study (compare to eigenvalues and eigenvectors; symmetric and inner products are prevalent even between multidimensional spaces). Thus the research program here is continuing.

One could take a look at the local and delayed velocities in Schwarzschild geodesics, keeping in mind that in DU space,

, as the critical radius is half of , , where the usage of local velocity is not completely clear (and when nesting energy frames, it gets more complicated as the in gravitational factor is more accurately , where the usage of is even more complicated), - momentum is decomposed into orthogonal components in hyperspace which can be locally hyperrotated, so often projected with

or its inverse (in contrast to the radial component and scalar time), while the space itself is expanding in the hyperradial direction at the hypervelocity , - signals propagate at local proper speed

along the volumetric surface dictated by the zero-energy condition, thus being affected by both the decreased velocity by and greater radial distance due to the curvature (which results in the empirically correct Shapiro time delay), while the emitters and receivers are in different energetic states which also affect observations, and - in abstract free-fall starting from far away, motion and gravitational factors become equal (reminiscent of the equivalence principle, where “gravitational time dilation

in a gravitational well is equal to the velocity time dilation for a speed that is needed to escape that gravitational well”, but not exactly the same), , thus allowing simply squaring the gravitational factor to get the factor , relating the predictions to local time standards, as was done in some of the velocity plots above. This detail could be the key in interpreting the differences between the standard gravitational models, such as GR, and the model studied here, as here the free-fall is only gravitationally present at each distance , not always kinematically. Relativity allows defining any reference frame, and here we are most often not looking from the point of view of a local, freely falling reference frame, which is treated more like a special situation here. Inspect these early images to get a glimpse of the ideas under study: a, b, c, d, e.

The list above is by no means exhaustive, but it displays the difficulties ahead due to accidental complexity, which results almost by definition from applying apparently simple ideas (in isolation) to complex problems. Iterating and recursing on partially overlapping concepts and definitions may hinder seeing the essence of the ideas, which in the case of DU almost always stem from the zero-energy principle in a spherically closed space. The scope of the conceptual problems in comparing the formulas is also under study, as the underlying postulates about the nature of time, space, and motion are obviously quite different in different propositions, each of which naturally aims to be internally coherent and valid without compromising on empirical correspondence and relevance. As an example, the equivalence principle is most often formulated in terms of derivatives (forces), where the boundary conditions are then lost (or they are forbidden, see discussion on absolute space and time), whereas Suntola thinks that energies (and thus integrals, where forces are then derivatives or gradients) are fundamental, and then boundary conditions are essential (however large or minuscule they may be, such as global curvature, they are there and may be necessary for making theoretical progress).

The alleged rather complicated nesting of the common energy frames proposed by Suntola (2018, p. 125 for example), and the consistency of the procedure in terms of both spatial and temporal effects, is not yet clear. There is considerable interest in understanding various experimental setups and their theoretical interpretation (see 1,2,3), as carried out under the umbrella of modern searches for Lorentz violation, or studying the physics of Michelson-Morley resonators, for example. See also the classical internet resource on the experimental basis of Special Relativity from The Original Usenet Physics FAQ, hosted by John Baez.

Do note that even though “In small enough regions of spacetime where gravitational variances are negligible, physical laws are Lorentz invariant in the same manner as special relativity” (see Lorentz group), the thought-experiment regions where the gravitational potential is constant are shrinking rapidly due to empirical results enabled by recent advancements in technologies of time measurement. Optical clocks resolving centimeters in height are technically ready for field applications, such as monitoring spatiotemporal changes in geopotentials caused by active volcanoes or crustal deformations, and for defining the geoid (see, for example, Takamoto, M., Ushijima, I., Ohmae, N. et al. 2020).
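The scale of "resolving centimeters in height" can be estimated with the first-order gravitational frequency shift g·h/c². This is a minimal sketch assuming uniform standard gravity near the surface; the function name `fractional_shift` and the 450 m example height are illustrative choices.

```python
C = 299_792_458.0      # speed of light, m/s
G_SURFACE = 9.80665    # standard gravity, m/s^2 (assumed uniform)

def fractional_shift(height_m, g=G_SURFACE):
    """First-order fractional frequency difference between two clocks
    separated vertically by height_m in a uniform field."""
    return g * height_m / C**2

per_cm = fractional_shift(0.01)
tower = fractional_shift(450.0)

print(f"1 cm height difference:  {per_cm:.3e}")
print(f"450 m height difference: {tower:.3e}")
```

A centimeter of height corresponds to a fractional frequency difference of about one part in 10^18, which is the level that modern optical lattice clocks are reaching.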

As for the physicality and reality of relativistic effects – see Pulsar Timing Arrays, for example, which seem to empirically imply that

[T]he fact that the Earth is moving on an elliptical orbit around the Sun, and hence at varying distances [and at varying orbital velocity], means that every clock on Earth experiences a varying gravitational potential [and thus varying energetic state] throughout the year. This leads to seasonal changes in clock rates that affect all clocks on Earth simultaneously. Astronomy can, hence, provide an “independent” time standard that is unaffected by effects on Earth or in the solar system. The key in providing such an astronomical time standard are objects called “pulsars”. Pulsars are compact, highly-magnetized rotating neutron stars which act as “cosmic lighthouses” as they rotate, enabling a number of applications as precision tools. (Becker, Kramer and Sesana 2018)
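The size of this seasonal effect can be estimated to first order from the Sun's potential and Earth's orbital speed. The sketch below assumes a Keplerian orbit, uses the vis-viva relation for the speed, and ignores the Moon, the planets, and Earth's own potential. The function name `rate_offset` and the rounded orbital elements are illustrative assumptions.

```python
GM_SUN = 1.32712440018e20   # heliocentric gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
A = 1.495978707e11          # semi-major axis of Earth's orbit, m (about 1 au)
E = 0.0167086               # orbital eccentricity (approximate)

def rate_offset(r):
    """First-order fractional slowing of a clock at heliocentric distance r,
    combining the potential term GM/(r c^2) and the velocity term v^2/(2 c^2)."""
    v2 = GM_SUN * (2.0 / r - 1.0 / A)   # vis-viva relation
    return GM_SUN / (r * C**2) + v2 / (2.0 * C**2)

perihelion = rate_offset(A * (1.0 - E))
aphelion = rate_offset(A * (1.0 + E))
swing = perihelion - aphelion

print(f"offset at perihelion: {perihelion:.4e}")
print(f"offset at aphelion:   {aphelion:.4e}")
print(f"seasonal swing:       {swing:.3e}")
```

Under these assumptions, clocks on Earth run slower at perihelion than at aphelion by several parts in 10^10, an annual modulation far above the resolution of modern atomic clocks and pulsar timing.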

In Suntola's studies, these effects are considered as physically present here and there at this very moment, meaning that one would need to really ponder the meaning of the SI second (and the accompanying metre, kilogram, and other fundamental units) with respect to these observations. Consider also Fig. 14b, where satellites are orbiting Earth, but the same effects are present when Earth is orbiting the Sun, and we are making experiments from the point of view of the "satellite Earth" with globally dilated time. As the speed of light is empirically measured as constant and isotropic, but using evidently varying clocks, it would imply that the proper speed of light at a distance changes from the point of view of the inertial frame of reference. Suntola has studied the idea that, going further into larger and larger spatial and temporal scales, there is a specific kind of cosmological timescale from which these local timescales could be derived.

There may be a possibility to model SR length contractions as theory-internal constructs necessary for being able to calculate in locally invariant units, but it would also make some claims of SR, such as the symmetry (reciprocity) of time dilation, suspect on these new empirical grounds. Also, some established interpretations of measurements, such as the distance between the Moon and Earth being constant during the year, would actually seem to imply that the distance increases when the Earth is closer to the Sun, as otherwise it would not be observed as invariant when taking into account the effect on clocks of the varying gravitational potential and the additional orbital velocity affecting the energetic state here on Earth. These serious propositions are under investigation.

Suntola reasons that, as clocks and processes evidently run faster in a weaker gravitational field (from the point of view of the barycenter of the solar system, where the pulsar calculations are done), the effects should be considered physical. He then proceeds to predict that all matter expands uniformly at velocity (in the respective energy frames with respect to their center-of-momentum frames), so the observations are physically understandable. One way to understand this is by looking at the algebraic chain of relations from the Compton wavelength

where

In addition to the kinematic effect, the Suntola model treats the varying gravitational potential as affecting the energy of matter too, where algebraically the speed of sound in condensed matter is directly proportional to local

Do note that Trachenko explicitly states that the velocity of sound (pressure) in condensed matter does not depend on

See also the formula for the frequency of a Cesium atomic clock in Uzan (2003), Eq. 81,

which is directly proportional to

which needs studying with respect to

Interactive Mathematical Illustrations of Proposed Local Energy-Momentum Relations

Matter is modeled as having wave properties, where the usage of reduced masses or gravitational effects is not yet always clear, possibly due to the historical evolution of the models. There is a promise to unify the Compton wavelength and de Broglie wavelength interpretations under a single mass concept, using “intrinsic Planck constant”

and any momenta, correspondingly, a wavelength equivalence

where

Suntola uses the geometric properties of an enormous expanding hypersphere in four-dimensional space to suggest that the reason a small hydrogen atom can emit 21 cm wavelength light may have its physical basis in this hyperradial expansion at the speed of light, where the wave equivalence of mass and energy may gain new physical meaning, as any point source could trace a path like an elongated isotropic monopole or dipole antenna in 4D (orthogonal to all 3D spatial directions) due to this sustained “hidden” movement even at rest. Also the geometries of Dirac matrices, which represent 4+1 dimensional Clifford algebras (isomorphic to 2+3 and 0+5 dimensional algebras), could suggest the same. Interactions (emissions and absorptions) would be wavelength-selective (and frequency-selective), not energy-selective, as can perhaps be motivated by using the definitions above:

This idea would be of great importance when utilizing relations (such as studying wave equations or simply using

The Doppler formula for observed frequencies in the Suntola framework (see Suntola 2018, pp. 183–193) is

where the gravitational effect (redshift or blueshift), transversal effect (kinematic time dilation), and classical Doppler effect for both sources

In the thinking of Suntola, photons (and the related Planck relation) are related to interactions (i.e. emissions and absorptions), not so much to propagation in vacuum, which changes the interpretation of various observations considerably (but note that quantum mechanical descriptions of beam splitters, photon statistics, and many other crucial phenomena have not been analyzed here). For example, the emitted wavelength is then independent of the gravitational state of the emitter (as frequency and speed of light are linked). The observed gravitational blueshift, where the wavelength is observed as decreased deeper in the Earth's gravitational well, is simply due to the speed of light being decreased accordingly (which is also communicated by gravitational time dilation, as the speed of light is invariant for a local observer); the oscillations thus clump closer together and are observed locally as an increased frequency even though no energy has been gained. It is a drastically different picture from the usual conception of photons gaining energy when propagating deeper in a gravitational field, even though the local observations are otherwise identical. Note that the reasoning may be more complicated with respect to light emitted in the cosmological past, as there both

The following illustrations are under construction, displaying the partial equivalence of conventional four-vector (see four-momentum) formalisms with this 4-dimensional Euclidean momentum space utilized here (at least in the hyperplane crosscut of the momentum space), especially the relativistic energy-momentum relation, the mapping facilitated by the Gudermannian function:

The above interactive figures display hyperbolic (standard in relativity, see also Dirac matter), parabolic (related to nilpotents, dual numbers and thus automatic differentiation), and Euclidean (quite common-sense local view on the volumetric surface of an expanding three-sphere) interpretations of concepts such as the total (relativistic) energy (in red), kinetic energy (red outside the unit circle), relativistic mass increase (in tangential direction due to supplied mass-energy), rest energy (in center-of-momentum frame), “reduced rest momentum” (hypothesized hyperradial effect of motion), “inertial work” (hyperradial component of kinetic energy against the rest of space), etc. I recommend studying the two formulas near the bottom of the page for some insight into the idea of nested energy frames from an internal and external point of view.
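The role of the Gudermannian function in mapping between the hyperbolic and Euclidean pictures can be checked numerically. The sketch below uses the standard definition gd(w) = arctan(sinh w) and units where the rest energy is 1. The function names are hypothetical, and the "Euclidean picture" here is simply the right triangle with legs mc² and pc and hypotenuse E.

```python
import math

def gudermannian(w):
    """Map a rapidity (hyperbolic angle) w to a circular angle phi."""
    return math.atan(math.sinh(w))

def euclidean_picture(w, rest_energy=1.0):
    """Return (phi, total energy, momentum * c) for rapidity w, computed
    with circular functions of phi instead of hyperbolic functions of w."""
    phi = gudermannian(w)
    total = rest_energy / math.cos(phi)   # hypotenuse: E = mc^2 / cos(phi)
    momentum_c = total * math.sin(phi)    # horizontal leg: pc = E sin(phi)
    return phi, total, momentum_c

w = 1.2
phi, total, momentum_c = euclidean_picture(w)

print(f"beta  = tanh(w) = {math.tanh(w):.6f}   sin(phi)   = {math.sin(phi):.6f}")
print(f"gamma = cosh(w) = {math.cosh(w):.6f}   1/cos(phi) = {total:.6f}")
print(f"gamma*beta = sinh(w) = {math.sinh(w):.6f}   tan(phi) = {momentum_c:.6f}")
```

The same numbers therefore satisfy both E² - (pc)² = (mc²)² in the hyperbolic reading and the Pythagorean relation among the triangle's sides in the Euclidean reading, which is the sense in which the two formalisms are partially equivalent.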

Velocity addition formulas (of rapidities or hyperbolic angles) are hypothesized to get augmented by addition of space-like momenta, with energy bookkeeping for time measurement in each nested energy frame. The following figures visualize the effect of a transparent moving (and non-magnetic) medium on light (see Fizeau experiment) in the Suntola framework, with adjustable refractive index (vertical dot in the diagram). There are two horizontal black lines: one is the velocity

It is quite remarkable how close the predictions of the relativistic velocity-addition formula and this momentum-space construction above are (when
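For reference, the conventional side of this comparison is easy to reproduce. The sketch below evaluates only the standard relativistic velocity-addition formula and the classical Fresnel drag approximation for light in moving water. It does not implement the momentum-space construction of the figures, and the flow speed and refractive index are illustrative values.

```python
C = 299_792_458.0   # speed of light in vacuum, m/s

def relativistic_addition(u, v):
    """Standard collinear relativistic velocity addition."""
    return (u + v) / (1.0 + u * v / C**2)

def fresnel_drag(n, v):
    """Classical Fresnel approximation: c/n + v * (1 - 1/n^2)."""
    return C / n + v * (1.0 - 1.0 / n**2)

n_water = 1.333     # refractive index (illustrative)
v_flow = 7.0        # flow speed of the medium, m/s (illustrative)

exact = relativistic_addition(C / n_water, v_flow)
approx = fresnel_drag(n_water, v_flow)
dragged = exact - C / n_water

print(f"light speed gained from the flow: {dragged:.4f} m/s")
print(f"exact minus Fresnel:              {exact - approx:.2e} m/s")
```

The light picks up only the fraction (1 - 1/n²) of the flow speed, and the Fresnel form agrees with the exact formula to well below any practical measurement precision at laboratory flow speeds.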

Photons (electromagnetic radiation) do not carry rest momentum (in vacuum), so their momentum is purely spacelike (horizontal in the Euclidean picture, isotropic lightlike in the hyperbolic picture), and they get a “free ride” along the hyperspace expansion without carrying complex momentum – compare to the aforementioned logarithmic spirals (Fig. 7) further up on this page.

One may also keep in mind that the kinematic and energetic descriptions are not necessarily equivalent; the same kind of group transformations and symmetries that apply to energy-momentum relations above, do not necessarily have to apply to kinematic (position and velocity) descriptions, especially as in the latter descriptions energy conservation is often violated, not least because gravity is ignored. See, for example, the thought experiments in relativity of simultaneity, some of which may turn out to be quite unphysical, fueled by kinematic descriptions of light propagation and application of Lorentz-transformations to fictional physical settings that do not respect energy conservation. Note also how momentum-space representations are often quite naturally invariant with respect to constant (“background”) translational velocities, being essentially derivatives. Perhaps internal and external clock synchronization in Mansouri–Sexl theory could give further ideas.

Length contraction could be mostly an illusion. Muons traveling in the atmosphere, for example, could simply have longer lifetimes (as is observed) due to their reduced rest momenta. As the muon is “not aware” of its “reference clock” running slower, from the point of view of the muon the atmosphere seems contracted in the direction of motion – the ground seems to be reached too early for its usual inferred speed, and length contraction of the atmosphere is needed as a conceptual and calculational tool to understand the situation. Actually, according to the latest understanding of the DU framework, it seems that Compton wavelengths are increased at velocity, which means that all the usual distance measures such as the Bohr radius and the wavelength of radiation increase accordingly, and thus the SI meter [m] grows at speed. This could be a very natural understanding of the situation, where long distances then seem shrunk, but locally (in the same center-of-momentum frame for the moving matter) things seem invariant even though they have expanded from a larger-system point of view. There are great resources on the web where the history of special relativity and the discussions among the greats such as Hendrik Lorentz, Henri Poincaré and Albert Einstein on these “real” and “apparent” lengths and times are recollected. So to reiterate, Suntola proposes, instead, that many objects themselves expand at velocity, to keep the physics consistent (as was also alluded to above with respect to a quartz oscillator driving the atomic clock, having an observationally decreased frequency at speed).
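The empirical content of the muon example is independent of which interpretation one prefers, and can be sketched with the standard lifetime-dilation arithmetic. The speed and the production altitude below are illustrative assumptions, and the function names are hypothetical.

```python
import math

C = 299_792_458.0              # speed of light, m/s
MUON_LIFETIME = 2.1969811e-6   # mean lifetime at rest, s

def lorentz_factor(beta):
    return 1.0 / math.sqrt(1.0 - beta**2)

def surviving_fraction(distance_m, beta, dilated=True):
    """Fraction of muons surviving a flight of distance_m at speed beta*c,
    with or without the lifetime stretched by the Lorentz factor."""
    travel_time = distance_m / (beta * C)
    lifetime = MUON_LIFETIME * (lorentz_factor(beta) if dilated else 1.0)
    return math.exp(-travel_time / lifetime)

beta = 0.998        # illustrative muon speed
altitude = 15_000   # illustrative production altitude, m

gamma = lorentz_factor(beta)
with_dilation = surviving_fraction(altitude, beta, dilated=True)
without_dilation = surviving_fraction(altitude, beta, dilated=False)

print(f"Lorentz factor:                 {gamma:.2f}")
print(f"surviving with longer lifetime: {with_dilation:.3f}")
print(f"surviving with rest lifetime:   {without_dilation:.2e}")
```

With these inputs roughly a quarter of the muons reach the ground when their lifetime is lengthened, compared with about one in ten billion otherwise. Any interpretation, whether phrased as length contraction or as physically changed reference rates, has to reproduce this observed survival rate.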

Note that in the Suntola framework, transforming more complicated geometric objects has not been analyzed yet, and a thorough study is needed in the future of the interpretation of various conventional basis transforms and of the feasibility of the alleged possibility of ignoring the standard procedures in relativistic physics. In this model, the reason why the Lorentz transforms seem to work symmetrically for energy-momentum relations is that the observer actually speeds up to the observed (or the observed slows down to the observer) in relation to a common energy frame with respect to gravity. Their references thus become the same, as is observationally understood, but from the perspective of that larger common energy frame, their references (such as reference masses or reference frequencies) have simply changed to be compatible. It is suggested that the two scenarios are not the same in linear units (from the hyperspace perspective), contrary to how the Lorentz transformation (hyperbolic rotation) to the center-of-momentum frame, often seen as stemming from the principle of relativity, is understood at present.

See my presentation on hyperspherical geometries for a brief mention of John Avery's work on hyperspherical harmonics (see [1], for example). It seems that atomic orbitals, which are usually represented in position basis, could be related to four-dimensional Euclidean spaces and specifically to hyperspheres (which can be conceptualized around any point on the four-dimensional space, also on the S

For the adventurous, see also the vast work on geometric algebras (Clifford algebras over reals, see also geometric calculus), especially on stereographic projections and spin velocities, by Garret Sobczyk, to potentially gain new insights on the geometric physicality of gamma matrices and related tools of modern physics, unifying matrix and algebraic approaches in search of new interpretations of quantum mechanics. As gamma matrices constitute a 4+1 dimensional Clifford algebra, we are interested in interpretations of them with respect to the expanding S

In the future, these kinds of questions could be studied using advanced chain-of-thought language models, but at the moment, their correct mathematical usage is best assessed in textbook problems. The questions on this research notes page deal with cross-category problems, and the various empirical observations have been infused with theory-based interpretations in the literature, so if you manage to formulate these ideas with such clarity as to get dependable results from large language models and other AI tools, we would be most happy to hear about those developments.

Finally, the following are among the most creative and intriguing fundamental propositions in the works of Suntola. The first one decomposes the relativistic momentum above as two opposing reduced “internal” Doppler-shifted radiative momenta (at the speed of light in the direction of motion and against it), which is the antisymmetric or odd part of hyperbolically normalized

For the theoretically-minded, see how the symmetric and antisymmetric parts of the above could be related to the Dirac current (see also action formulation in curved spacetime using shrinking 4-volume, helicity as the projection of the spin onto the direction of motion, zitterbewegung, vector symmetry, and axial symmetry, using pseudoscalar or volume-element

The second identity interprets the dynamic effect of a hyperscalar

Suntola is convinced that the above syntactically true relations will be crucial in understanding the isotropic consistency of nested energy frames in the hypothetical hyperspace and their importance in explaining relativistic effects in a physically meaningful way.
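One reading of the first proposition above can be checked numerically. The sketch assumes that the two opposing "internal" momenta correspond to the standard relativistic Doppler factor D = sqrt((1+β)/(1-β)) and its inverse, so that the momentum is the odd part and the energy the even part of D. This is an interpretation offered for illustration, not a transcription of Suntola's formula, and the function names are hypothetical.

```python
import math

def doppler_factor(beta):
    """Standard longitudinal relativistic Doppler factor."""
    return math.sqrt((1.0 + beta) / (1.0 - beta))

def momentum_from_doppler(beta, mc=1.0):
    """Odd (antisymmetric) combination: (mc/2) * (D - 1/D)."""
    d = doppler_factor(beta)
    return 0.5 * mc * (d - 1.0 / d)

def energy_from_doppler(beta, mc2=1.0):
    """Even (symmetric) combination: (mc^2/2) * (D + 1/D)."""
    d = doppler_factor(beta)
    return 0.5 * mc2 * (d + 1.0 / d)

beta = 0.6
gamma = 1.0 / math.sqrt(1.0 - beta**2)

print(f"gamma*beta = {gamma * beta:.6f}   from Doppler factors: {momentum_from_doppler(beta):.6f}")
print(f"gamma      = {gamma:.6f}   from Doppler factors: {energy_from_doppler(beta):.6f}")
```

Since D equals the exponential of the rapidity, the two combinations are just sinh and cosh of the rapidity, which is why the decomposition holds identically for every β.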

As a summary, studying the implications of the zero-energy universe, as Suntola has done in The Dynamic Universe, together with some assumptions about the hyperspherical geometry, could lead us to a more precise understanding of the nature of physical law – where it could be ever easier to fit the various pieces of observational and experimental evidence together to give a more complete picture of the cosmos. The emerging picture of unified physics could be seamless across the scales, but there is a lot of theoretical work to be done to merge this fruitfully with the various bodies of knowledge. As every model has its domain of applicability, we encourage readers to consider this project as offering physical, mathematical, and philosophical inspiration in each of their own contexts (for philosophy, see Kakkuri-Knuuttila; for difficulties in communication across contexts and criteria for theory evaluation, see Styrman; for art and personal contexts, see Rembrandt: Philosopher in Contemplation, 1632). Also remember The Art of Doing Science and Engineering: Learning to Learn.

See “Last updated” timestamp below. Motivational first impressions (32 pages, early 2023) is also available.

xkcd.com/2205

xkcd.com/2207